10.1 Lecture slides

10.2 Introduction

The goal of today’s assignment is to help you understand both the potential and the limits of generative LLMs as tools for social science research. Rather than using large language models to improve performance on tasks that we have already attempted with other methods (such as classification, scaling, and topic modelling), we will instead use them to generate text that can be used in downstream applications.

Recent research has shown that large language models can serve as proxies for human populations in social science research, at least in the sense that when a model is prompted to take on the persona of an individual with a given set of background characteristics, it responds to survey questions in a way that closely mimics the distribution of responses from real people with those characteristics.

We will use a large language model (LLM) to simulate open-ended survey responses from fictional individuals with different demographic profiles. You will prompt the model to generate a first-person paragraph describing the individual’s political beliefs, and then analyze the generated text using the methods you have learned in previous weeks of the course.

10.3 Packages

10.4 Gemini API

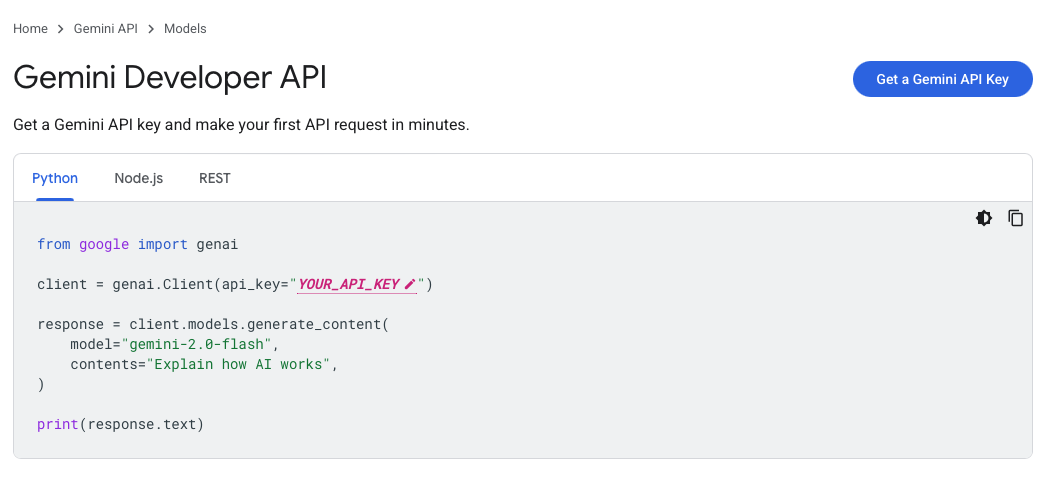

We will use Google’s Gemini model for this exercise (see note 1). To call the model through the Gemini API, you first need to register for access to the Gemini Developer API, which you can do by following this link (you will need a Google account). Once you have followed that link, you should see a screen like this:

1 Note that we could use essentially any LLM for this problem set. I have opted to use Gemini here because it is reasonably easy to generate the relevant API key, Gemini models are competitive with other leading LLMs, and – crucially – it comes with a generous free usage tier (with the downside that your data is used to improve the model).

Click “Get a Gemini API key”, then on the next screen click “Create API key” and follow the steps to generate your API key.

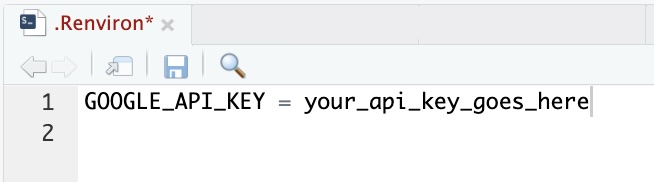

Once you have generated your API key, you will need to save it to the GOOGLE_API_KEY environment variable in your .Renviron file. For an easy way to find this file, use the following code:

You then need to edit the .Renviron so that it looks like this:

Then save the file and restart R (see note 2).

2 Note that if you struggle to find or edit the .Renviron file, do not despair. You can provide your API key directly to the `chat_gemini()` function we use below (e.g. via `chat_gemini(api_key = "your_api_key")`), but this is not usually considered best practice because it exposes your API key directly in your scripts, which can accidentally lead to it being shared publicly (e.g. if you push your code to GitHub). Storing your API key in the .Renviron file keeps it out of your scripts, so they remain safe to share and collaborate on.
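After restarting R, it is worth checking that R can actually see the key. The line you add to .Renviron takes the form `GOOGLE_API_KEY=your_api_key` (with your own key in place of the placeholder), and if you have the usethis package installed, `usethis::edit_r_environ()` is one convenient way to open the file. The helper below, `has_gemini_key()`, is a hypothetical function of our own (not part of ellmer); it only reports whether the environment variable is set and non-empty, not whether the key is valid.

```r
# Hypothetical helper: TRUE if R can see a non-empty GOOGLE_API_KEY.
# Sys.getenv() returns "" for variables that are not set.
has_gemini_key <- function() {
  nzchar(Sys.getenv("GOOGLE_API_KEY"))
}

# Print a reminder if the key is missing (e.g. R was not restarted)
if (!has_gemini_key()) {
  message("GOOGLE_API_KEY is not set: check your .Renviron file and restart R.")
}
```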

10.5 Prompting the model

We will begin by getting Gemini to generate synthetic responses about a hypothetical person’s political opinions. To do this, we will use the ellmer package, a relatively new R package that provides a common interface to several LLM providers, including the Gemini API (via its `chat_gemini()` function). This interface makes it convenient to send prompts to the model and retrieve its responses.

Below is an example of how to construct and send a prompt. The goal is to simulate a paragraph written in the first person by a fictional individual with a specific demographic profile. The model is instructed to avoid describing the person’s background and instead focus on their political beliefs, values, and the issues they see as most important in the UK today.

In the code below, there are three main steps:

- First, we define the prompt: the text that we want to send to the LLM.
- Second, we define a chat object using the `chat_gemini()` function. Here we tell ellmer which version of Gemini we wish to use.
- Finally, we send the prompt to the API using the `chat()` method attached to our `my_chat` object.

- Run the code below to generate a single example and inspect the response:


```r
# Define the prompt to send to the model
prompt <- "Write a short paragraph in the first person,
explaining your political beliefs.
You are a 38-year-old man from the UK
with a highest qualification of postgraduate education.
You voted for Labour in the last election.
Do not describe yourself or your background --
focus only on your political beliefs.
In particular, describe the values that guide your
beliefs and the issues you think are most
important facing the country today."

# Set up a chat object for interacting with the model
my_chat <- chat_gemini(model = "gemini-2.0-flash-lite")

# Send the prompt to the model using the chat() method
output <- my_chat$chat(prompt)
```

```
I believe in a society that prioritizes fairness and opportunity for everyone.
My values are rooted in social justice and a strong belief in the collective
good. I think it's crucial that we have robust public services like the NHS and
invest heavily in education and infrastructure to level the playing field.
Currently, I'm most concerned about economic inequality, the climate crisis,
and the erosion of social cohesion. Addressing these issues requires bold
policies focused on wealth redistribution, sustainable development, and
fostering a more inclusive society where everyone feels they belong.
```

The `chat()` method of an ellmer chat object retains the conversation’s context, which means that it remembers your previous interactions each time you use it for a new call. If we instead want each call to forget previous interactions and start the conversation from scratch, we need to create a new chat object for each interaction. This is a bit annoying for our purposes, so instead we are going to create a function, `run_interaction()`, which initialises a new chat object whenever we make a new call to Gemini.
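A minimal sketch of such a function is shown below. It assumes ellmer’s `chat_gemini()` as used above; the default `model` argument is our own choice, and the original solution may differ. Calling `ellmer::chat_gemini()` with the package prefix means the function can be defined even before `library(ellmer)` has been run.

```r
# Send a single prompt to Gemini in a fresh chat, so that no earlier
# conversation history is carried over between calls.
run_interaction <- function(prompt, model = "gemini-2.0-flash-lite") {
  # A new chat object for every call: this is what discards previous context
  chat <- ellmer::chat_gemini(model = model)
  # $chat() sends the prompt and returns the reply
  # (ellmer may also print the reply to the console)
  chat$chat(prompt)
}
```

It is then called with just the prompt, e.g. `output <- run_interaction(prompt)`.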

Use this function to send the prompt from above to the model. Note that the text will not, in general, be exactly the same as before: this is a separate call to the LLM, and because text generation is probabilistic, different text may be generated each time.

```
I believe in a society built on fairness and opportunity for all. My values are
rooted in social justice, equality, and a strong sense of community. I support
a robust welfare state to protect the vulnerable and ensure everyone has access
to essential services like healthcare and education. I think the most pressing
issues facing the UK today are the cost of living crisis, the ongoing
challenges within the NHS, and the climate crisis. I also believe we need to
address wealth inequality and invest in infrastructure and green technologies
to secure a sustainable future for generations to come.
```

- Adapt the `prompt` above, changing some of the characteristics of the individual. You might make them a different age, give them a different voting history, or change something else. How does this affect the generated text?

```r
# Define the prompt to send to the model
prompt <- "Write a short paragraph in the first person,
explaining your political beliefs.
You are a 22-year-old woman from the UK
with a highest qualification of GCSEs.
You voted for the Conservatives in the last election.
Do not describe yourself or your background --
focus only on your political beliefs.
In particular, describe the values that guide your
beliefs and the issues you think are most
important facing the country today."

# Generate output
output_2 <- run_interaction(prompt)
```

```
Honestly, I'm pretty straightforward when it comes to politics. I believe in
hard work and personal responsibility – if you put the effort in, you should be
able to get ahead. That's why I voted Conservative last time. I think they're
best placed to manage the economy and keep things stable, which is important
for everyone, you know? I value things like law and order, and I want to see
strong borders to protect our country. The cost of living is definitely the
biggest worry right now, and I hope the government can get a grip on things and
make life a bit easier for ordinary people like me.
```

The LLM clearly seems to think that a 22-year-old who voted Conservative, and whose education only went up to GCSE level, would have quite different political beliefs from the 38-year-old Labour voter above. This makes sense, particularly given that past vote is likely to be strongly predictive of political attitudes.

10.6 Creating prompts programmatically

Rather than manually editing the prompt each time we want to generate a response for a different synthetic individual, a more efficient approach is to define a function that constructs the appropriate prompt from a given set of inputs.

Create a function which takes four arguments:

- `age`: the age of the hypothetical individual
- `gender`: the gender of the hypothetical individual
- `education`: the highest qualification of the hypothetical individual
- `vote`: the party the hypothetical individual voted for in the last election

Use the `paste0()` function to construct the prompt so that the arguments defined above are inserted in the correct places in the surrounding prompt text. Your function should return a `data.frame` which includes the characteristics of the individual as well as the prompt you have created.

```r
generate_prompt <- function(age, gender, education, vote){
  # Insert the individual's characteristics into the surrounding prompt text
  prompt <- paste0(
    "Write a short paragraph in the first person, explaining your political beliefs. ",
    "You are a ", age, "-year-old ", gender, " from the UK",
    ", with a highest qualification of ", education,
    ". You voted for ", vote, " in the last election. ",
    "Do not describe yourself or your background—focus only on your political beliefs. ",
    "In particular, describe the values that guide your beliefs and the issues you think are most important facing the country today."
  )
  # Return the characteristics alongside the constructed prompt
  out <- data.frame(age, gender, education, vote, prompt)
  return(out)
}
```

- Use the function you just created to create a prompt for an individual with some characteristics of your choice.

```
[1] "Write a short paragraph in the first person, explaining your political beliefs. You are a 65-year-old male from the UK, with a highest qualification of degree. You voted for Reform in the last election. Do not describe yourself or your background—focus only on your political beliefs. In particular, describe the values that guide your beliefs and the issues you think are most important facing the country today."
```

- Send your newly constructed prompt to the Gemini API and inspect the response. Does it make sense?

```
I believe in hard work and personal responsibility. I think everyone should
have the opportunity to get ahead, and that means a strong economy where
businesses can thrive and create jobs. That’s why I voted Conservative last
time – I think they’re best placed to manage the economy and keep taxes down,
so people can keep more of what they earn. I also value a strong sense of
community and tradition. Keeping our borders secure is really important to me,
and I think we need to support our armed forces and protect our heritage. The
biggest issues we face right now are the cost of living and making sure we have
enough good jobs. We need to get inflation under control and invest in skills
training, so young people like me have the chance to build a good life.
```

Compare the response carefully with the prompt. This response says “I voted Conservative last time” and refers to “young people like me”, neither of which fits the 65-year-old Reform voter described in the prompt above. This suggests that an earlier prompt may have been sent instead of the newly constructed one, so check which prompt you actually passed to the model before drawing conclusions about how the LLM portrays an individual with these characteristics.

10.7 Generating “silicon” samples

In the previous sections, we manually experimented with prompting an LLM to generate political statements from hypothetical individuals with different characteristics. While this approach works well for single examples, in real social science research we often require larger synthetic datasets to study patterns systematically. Questions 6, 7, and 8 guide you through a more systematic approach to creating these datasets.

- First, define a set of demographic characteristics that you want to vary across your simulated respondents. Store each characteristic as a separate vector whose elements are the possible values of that characteristic.
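As an illustration, the vectors below reuse values that appear in the prompts earlier in this section, plus the Liberal Democrats; the age range and categories are assumptions for the sake of example, so choose your own. The party names are phrased (e.g. “the Conservatives”) so that they read naturally after “You voted for” in the prompt.

```r
# Illustrative values for each characteristic (choose your own)
ages       <- 18:80
genders    <- c("man", "woman")
educations <- c("GCSEs", "A-levels", "degree", "postgraduate education")
votes      <- c("Labour", "the Conservatives", "the Liberal Democrats", "Reform")
```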


- Generate a synthetic dataset containing a set of individuals whose characteristics are sampled at random from the vectors that you defined above. That is, each individual in your data should have a random age, gender, education and past vote. Construct your data for no more than 50 individuals.
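One way to draw the sample is sketched below. `sample_individuals()` is a hypothetical helper that draws each characteristic independently and uniformly at random (with replacement) from the vectors you supply, and adds an empty `text` column for the LLM output. It indexes with `sample.int()` rather than calling `sample()` on the values directly, because `sample(x, ...)` treats a single number `x` as the range `1:x`.

```r
# Draw n synthetic individuals, sampling each characteristic
# independently and uniformly at random, with replacement.
sample_individuals <- function(n, ages, genders, educations, votes) {
  # Index-based draw: safe even when a vector has only one element
  draw <- function(x) x[sample.int(length(x), n, replace = TRUE)]
  data.frame(
    age       = draw(ages),
    gender    = draw(genders),
    education = draw(educations),
    vote      = draw(votes),
    text      = NA_character_  # placeholder, filled later with LLM output
  )
}
```

For example, with vectors named as in the previous step, `set.seed(123)` followed by `silicon_sample <- sample_individuals(50, ages, genders, educations, votes)` gives a reproducible sample of 50 individuals. Independent uniform draws ignore correlations between characteristics (for example between age and vote) that exist in real populations.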


- Write a for-loop which a) generates a bespoke prompt for each of the synthetic individuals that you created in the questions above, b) sends that prompt to the Gemini API, and c) saves the LLM-generated text in the `text` variable of the `data.frame` you created above. I’ve written some starter code for you below, but you will need to fill in the gaps (see note 3).

3 Note that the Gemini API has rate limits. For the Gemini 2.0 Flash-Lite model that we are using, the limit is currently 30 requests per minute and 1,500 requests per day. To avoid exceeding the per-minute limit, I have included the command `Sys.sleep(2.2)` in the for-loop. This adds a 2.2-second pause to each iteration of the loop. Since 60 seconds / 30 requests = 2 seconds per request, the pause ensures that we don’t make more than 30 requests in any 60-second period.

```r
# Setting i by hand lets you test the loop body on a single row first
i <- 1

## Loop over rows of the silicon_sample data
for (i in seq_len(nrow(silicon_sample))) {

  # Pause so as to avoid making too many API requests
  Sys.sleep(2.2)

  # Generate prompt for the relevant row of the silicon_sample data

  # Generate response from Gemini API

  # Store the output in the relevant row of the silicon_sample data

}

# Save the output
```

- Once you’ve generated your synthetic sample, take some time to explore the data:

- Are the responses coherent and plausible?

- Do they vary in tone, content, or emphasis based on demographics?

- Are there recurring phrases or values that appear frequently?
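For reference, the loop from the starter code can also be wrapped in a reusable function. The sketch below, `fill_responses()`, is a hypothetical helper: it assumes a prompt builder that returns a `data.frame` with a `prompt` column (as `generate_prompt()` does) and a sender that returns the generated text (such as `run_interaction()`). It also wraps each call in `tryCatch()`, so that a single failed request is recorded as `NA` rather than stopping the whole loop; that is a design choice of this sketch, not part of the original exercise.

```r
# Fill the `text` column of a data frame of synthetic individuals.
# make_prompt: function(age, gender, education, vote) returning a
#              data.frame with a `prompt` column
# send:        function(prompt) returning the generated text
fill_responses <- function(df, make_prompt, send, pause = 2.2) {
  # Create the text column if it does not exist yet
  if (is.null(df$text)) df$text <- rep(NA_character_, nrow(df))
  for (i in seq_len(nrow(df))) {
    # Pause to stay under the per-minute rate limit
    Sys.sleep(pause)
    # Build the prompt for row i from that individual's characteristics
    prompt <- make_prompt(df$age[i], df$gender[i], df$education[i], df$vote[i])$prompt
    # Send the prompt; on error, warn and store NA instead of stopping
    df$text[i] <- tryCatch(
      as.character(send(prompt)),
      error = function(e) {
        warning("Row ", i, ": ", conditionMessage(e), call. = FALSE)
        NA_character_
      }
    )
  }
  df
}
```

With the objects from this section, a call might look like `silicon_sample <- fill_responses(silicon_sample, generate_prompt, run_interaction)`, followed by something like `saveRDS(silicon_sample, "silicon_sample.rds")` to save the output (the file name is just an example). Rows with `NA` in `text` can be re-run later.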

10.8 Homework

- Assuming that you managed to collect the responses from Gemini above, you should now carry out some analysis showing how Gemini describes variation in political attitudes across one of the characteristics in the data. That is, using one of the methods we have studied on the course, tell me something interesting about the variation in political beliefs that Gemini believes to exist between voters of different parties, voters of different ages, people with different educational backgrounds, and so on. Create one visualisation that communicates your finding and upload it to this Moodle page.
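A quick descriptive starting point, both for the “recurring phrases” question and for the homework, is to compare the most frequent words across groups. The sketch below, `top_terms_by_group()`, is a hypothetical base-R helper and not one of the course methods: it lowercases each response, splits on anything that is not a lowercase ASCII letter or a straight apostrophe, drops a small illustrative stopword list, and counts the remaining words within each group.

```r
# Most frequent words per group (e.g. per party), using base R only.
# The stopword list is a small illustrative set, not a standard list.
top_terms_by_group <- function(texts, groups, n = 5,
                               stopwords = c("the", "and", "a", "to", "of", "in",
                                             "i", "that", "is", "for", "we", "it",
                                             "my", "our", "be", "are", "on", "with")) {
  lapply(split(texts, groups), function(txt) {
    # Lowercase, then split on anything that is not a-z or a straight apostrophe
    words <- unlist(strsplit(tolower(txt), "[^a-z']+"))
    # Drop missing values (failed calls), empty strings and stopwords
    words <- words[!is.na(words) & nzchar(words) & !(words %in% stopwords)]
    # Count words and keep the n most frequent
    head(sort(table(words), decreasing = TRUE), n)
  })
}
```

For example, `top_terms_by_group(silicon_sample$text, silicon_sample$vote)` lists the five most frequent words for each party. Raw counts are sensitive to group size and response length, so treat them as a prompt for more careful analysis with the methods from the course.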